WHAT YOU ABSOLUTELY NEED TO KNOW ABOUT AI ECONOMICS

Ever wonder why some AI services are free while others cost a fortune? Or why tech giants are pouring billions into chips while startups struggle to keep their models running? The answer lies in understanding two critical concepts: training and inference.

This isn't just technical jargon – it's the economic foundation that determines which AI companies will thrive and which will collapse under unsustainable costs. Whether you're building, buying, or just using AI tools, understanding this distinction is your key to making informed decisions in today's AI-powered world.

Training vs. Inference: The Essential Difference

Let's break it down simply:

TRAINING is teaching the AI. It's the expensive, intensive process where models learn from massive datasets. Think of it as giving your AI a premium Ivy League education that costs millions in tuition.

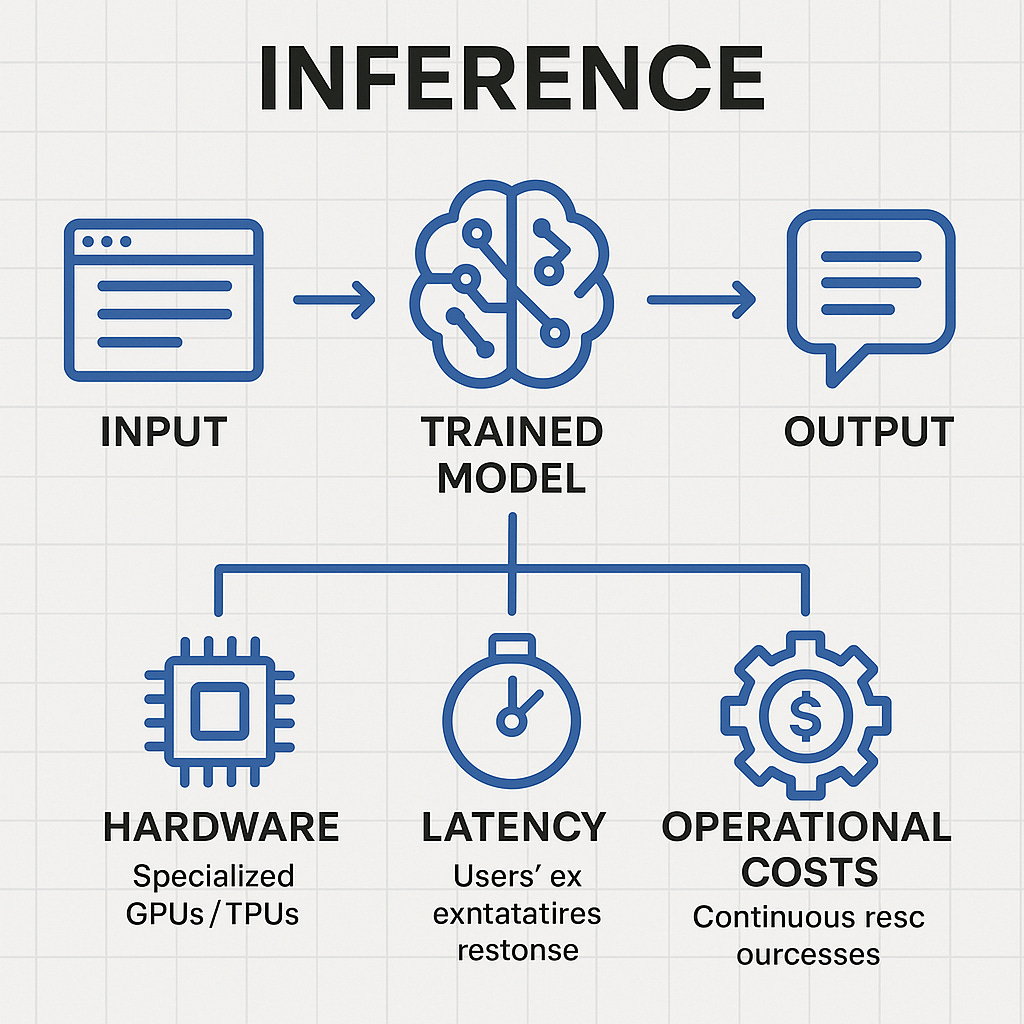

INFERENCE is using the AI. It's when the trained model applies what it learned to answer your questions or perform tasks. This is your AI's working life after graduation, where it earns its keep.

When you ask ChatGPT a question, you're not teaching it anything new – you're just asking it to apply what it already knows. That's inference.

Real-World Example: Instagram's Recommendation Engine

Instagram's content recommendation system provides a perfect illustration of how training and inference work in practice:

Training Phase:

Instagram collects vast amounts of data on user interactions (likes, comments, saves, time spent viewing)

This data feeds large-scale machine learning models that identify patterns in user preferences

Engineers continuously evaluate and fine-tune these models to improve accuracy

This computationally intensive process happens on powerful server clusters, not your phone

Training may incorporate billions of data points across millions of users

Inference Phase:

When you open Instagram, the recommendation engine springs into action

Your current behavior and profile serve as input features for the model

The trained model instantly evaluates thousands of potential content pieces

It ranks candidate posts, Reels, and Stories based on likelihood of engagement

The highest-ranked content appears in your feed or Explore page

Your subsequent behavior (whether you like, scroll past, or linger) feeds back as new data

All this happens in real time on Instagram's servers, with each request using only a tiny fraction of the compute that training required
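The inference steps above boil down to "score every candidate, sort, show the top few." Here is a minimal sketch of that loop. The `Candidate` fields, the `engagement_score` formula, and the affinity numbers are all made up for illustration; a real system would call a trained model where this sketch uses a hand-written formula.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    post_id: str
    topic: str
    age_hours: float


def engagement_score(candidate, topic_affinity):
    """Placeholder for a trained model: higher means more likely engagement."""
    affinity = topic_affinity.get(candidate.topic, 0.0)
    freshness = 1.0 / (1.0 + candidate.age_hours)
    # Hypothetical weighting; a learned model would replace this line.
    return 0.8 * affinity + 0.2 * freshness


def rank_feed(candidates, topic_affinity, top_k=3):
    """Inference step: score every candidate and return the top_k post ids."""
    ranked = sorted(
        candidates,
        key=lambda c: engagement_score(c, topic_affinity),
        reverse=True,
    )
    return [c.post_id for c in ranked[:top_k]]


# Hypothetical user profile and candidate pool.
user_affinity = {"cooking": 0.9, "travel": 0.6, "sports": 0.1}
pool = [
    Candidate("reel_1", "sports", 1.0),
    Candidate("post_2", "cooking", 5.0),
    Candidate("story_3", "travel", 0.5),
    Candidate("post_4", "cooking", 30.0),
]
print(rank_feed(pool, user_affinity, top_k=2))
```

Nothing in this loop updates the model. The scoring function is fixed at inference time, and any learning from the user's reaction happens later, in the next training cycle.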

This same pattern applies across virtually all AI-powered recommendation systems, from Netflix suggestions to TikTok's For You Page to Amazon's product recommendations. The expensive, intensive training creates the intelligence, while lightweight, efficient inference delivers personalized experiences in real time.

Why Does This Matter? Follow The Money!

The economics of these two processes are radically different:

Training Costs: Enormous one-time investments

OpenAI reportedly spent $78-100 million training GPT-4

Google's Gemini Ultra cost an estimated $191 million

Even the relatively modest GPT-3 required $4.6-12 million back in 2020

Inference Costs: Smaller per-use fees that accumulate over time

Companies pay roughly $0.03-0.06 per 1,000 tokens (roughly 750 words) for GPT-4

A company processing 1 billion tokens daily spends $30,000-60,000 every single day

At sufficient scale, cumulative inference costs can exceed the initial training investment

While training requires massive upfront investment, inference costs accumulate continuously over time and can eventually surpass the initial training expenditure, especially for widely used models.
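To see how long "eventually" is, here is a rough break-even sketch. It assumes a $100 million training bill (the upper GPT-4 estimate above), 1 billion tokens per day, and the top-end $0.06 per 1,000 tokens. The function name and the flat pricing are simplifications for illustration, not anyone's actual accounting.

```python
import math


def breakeven_days(training_cost_usd, tokens_per_day, usd_per_1k_tokens):
    """Days of inference needed for cumulative spend to pass the training bill."""
    daily_cost = tokens_per_day / 1000 * usd_per_1k_tokens
    return daily_cost, math.ceil(training_cost_usd / daily_cost)


# Assumed inputs: $100M training, 1B tokens/day, $0.06 per 1K tokens.
daily_cost, days = breakeven_days(100_000_000, 1_000_000_000, 0.06)
print(f"Daily inference spend: ${daily_cost:,.0f}")
print(f"Days until inference passes training: {days}")
```

At this volume the crossover takes about four and a half years. At ten times the traffic it arrives in under six months, which is why usage volume, not the training bill, tends to dominate the economics of popular models.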

The DeepSeek Controversy: A Lesson in AI Economics

In January 2025, Chinese AI startup DeepSeek created a global stir by claiming they had trained their state-of-the-art model for just $6 million – a fraction of what Western competitors spend. This announcement had an immediate impact on markets, with Nvidia's stock dropping significantly.

However, by early February 2025, multiple industry analysts had challenged this claim. An investigation by SemiAnalysis concluded that DeepSeek's actual computing infrastructure was far more substantial than initially suggested. By its estimate, the company has access to approximately 50,000 GPUs, a mix of Hopper-generation H800s, H100s, and H20s plus older Ampere-generation A100s, not merely the 2,048 GPUs they initially claimed.

More accurate estimates place DeepSeek's total infrastructure investment at approximately $1.3 billion when accounting for hardware, R&D, and supporting infrastructure. The $6 million figure likely represented only a specific subset of costs for the V3 base model, not their more advanced R1 model that demonstrated sophisticated reasoning capabilities.

This controversy highlighted the persistent challenges in accurately assessing AI development costs, as companies may report figures selectively based on different accounting methodologies or strategic considerations.

The DeepSeek situation is further complicated by regulatory concerns. Italian privacy regulators recently blocked the app from app stores due to data protection concerns, and U.S. authorities are investigating whether the company gained access to export-restricted NVIDIA H100 GPUs through third-party channels.

The Hidden Economics of AI

When we look deeper into the numbers, fascinating patterns emerge:

For Training:

Hardware represents 47-67% of costs (primarily specialized GPUs/TPUs)

R&D staff expenses account for 29-49% (AI specialists command significant salaries)

Energy consumption makes up the remaining 2-6% (enough electricity to power small towns)

For Inference:

Scale becomes the dominant factor (billions of operations daily)

Latency requirements drive infrastructure costs (users expect instant responses)

Continuous operation necessitates redundancy and reliability investments

The economics of training versus inference creates fundamentally different business models and technical challenges for AI companies.

Technical Innovations Driving Economic Efficiency

These economic pressures are driving rapid innovation on both fronts:

Mixture of Experts (MoE) Architecture:

Products/Examples: Mixtral 8x7B by Mistral AI, DeepSeek-V3 (671B parameters), DBRX by Databricks (132B parameters)

How it works: MoE reduces compute costs for both training and inference by activating only a fraction of the model's parameters for each token. All parameters still have to be loaded into memory (so GPU VRAM requirements stay high), but only a subset of experts runs for any given token, which makes computation much more efficient.

Benefits: Models using MoE architecture can be "pretrained much faster vs. dense models" and have "faster inference compared to a model with the same number of parameters."
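The routing idea is easier to see in code. Below is a toy sketch of top-k expert routing for a single token, using one common formulation (softmax over the selected experts' gate logits). The four "experts" are trivial stand-in functions and the gate logits are hard-coded; in a real model both are learned neural network layers.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, gate_logits, experts, top_k=2):
    """Route one token to its top_k experts and mix their outputs."""
    # Select the experts with the highest gate logits.
    chosen = sorted(
        range(len(experts)), key=lambda i: gate_logits[i], reverse=True
    )[:top_k]
    # Normalize gate weights over the chosen experts only.
    weights = softmax([gate_logits[i] for i in chosen])
    # Only the chosen experts are evaluated; the rest do no work.
    output = sum(w * experts[i](token) for w, i in zip(weights, chosen))
    return output, chosen


# Hypothetical experts: each just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.1, 2.0, -1.0, 2.0]  # would come from a learned router

output, chosen = moe_forward(1.0, gate_logits, experts, top_k=2)
print(chosen, output)
```

All four experts sit in memory, but only two run for this token. That is the trade described above: the memory footprint of the full model, with the compute cost of a much smaller one.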

Multi-Head Latent Attention (MLA):

Products/Examples: DeepSeek-V2, DeepSeek-V3, and DeepSeek-R1 models

How it works: MLA compresses the keys and values used by attention into a compact latent vector, reducing the KV cache needed for inference by up to 93.3% in DeepSeek's reported results.

Benefits: DeepSeek's implementation "lowers memory usage to just 5–13% of what the more common MHA architecture consumes," dramatically improving inference efficiency.

Adoption progress: The TransMLA project aims to convert widely used GQA-based models (like LLaMA, Qwen, and Mixtral) into MLA-based models to boost expressiveness without increasing KV cache size.
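A back-of-the-envelope comparison shows why a compressed cache matters. The sketch below compares the KV cache of standard multi-head attention with a latent-style cache that stores one compressed vector per token per layer. Every dimension here (32 layers, 32 heads, 128-dim heads, a 576-dim latent, 4,096 tokens) is a hypothetical configuration chosen for illustration, not the spec of any DeepSeek model.

```python
def kv_cache_bytes_mha(layers, heads, head_dim, seq_len, bytes_per_value=2):
    """Standard attention caches one key and one value vector per head."""
    return 2 * layers * heads * head_dim * seq_len * bytes_per_value


def kv_cache_bytes_latent(layers, latent_dim, seq_len, bytes_per_value=2):
    """Latent-style cache: one compressed vector per token per layer."""
    return layers * latent_dim * seq_len * bytes_per_value


# Hypothetical model configuration (16-bit values, 4,096-token context).
mha = kv_cache_bytes_mha(layers=32, heads=32, head_dim=128, seq_len=4096)
latent = kv_cache_bytes_latent(layers=32, latent_dim=576, seq_len=4096)

print(f"MHA cache:    {mha / 2**20:,.0f} MiB")
print(f"Latent cache: {latent / 2**20:,.0f} MiB")
print(f"Latent cache is {latent / mha:.1%} of the MHA cache")
```

With these assumed numbers the latent cache is about 7% of the standard one. Since the cache grows with every token and every concurrent user, that saving translates directly into more requests served per GPU.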

Specialized Inference Hardware:

Products/Examples: NVIDIA H100/H200, AMD MI300 series, Groq LPU, Cerebras WSE-3, d-Matrix Corsair

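How it works: Chips such as Groq's LPU and d-Matrix's Corsair are built specifically for inference workloads, while general-purpose accelerators like the H100/H200 and MI300 series serve both training and inference.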

Benefits: These specialized chips are "more attuned to the day-to-day running of AI tools and designed to reduce some of the huge computing costs of generative AI."

Company developments: Groq provides "fast AI inference in the cloud and in on-prem AI compute centers" using its Language Processing Unit (LPU) technology, which delivers "instant speed, unparalleled affordability, and energy efficiency at scale." AMD recently released the MI325X chip and claims it has "market leading inference performance," particularly for 70B parameter LLMs.

Edge Computing for Inference:

Products/Examples: NVIDIA Jetson, Google Edge TPU, Qualcomm Cloud AI 100, Apple Neural Engine

How it works: Edge AI runs machine learning tasks directly on interconnected edge devices, processing data close to where it's generated rather than in remote data centers.

Benefits: Processing AI inference at the edge delivers real-time results, enhances privacy by keeping data local, and reduces bandwidth costs by eliminating the need to transmit raw data to cloud servers.

Applications: Common examples include "smartphones, wearable health-monitoring accessories (e.g., smart watches), real-time traffic updates on autonomous vehicles, connected devices and smart appliances."

Algorithmic Breakthroughs: Recent research shows optimized training methods can achieve the same performance as models 4x larger while using significantly less computing power.

If current trends continue without these innovations, the cost of training frontier models could exceed $1 billion by 2027, effectively limiting development to only the most well-funded organizations.

PRACTICAL UNDERSTANDING: THE COST BREAKDOWN

To truly grasp the economics at play, let's look at real numbers for a medium-sized company using AI:

Training a Custom Model

Base compute costs: $250,000 - $1.5 million (depending on model size)

Engineering team (5 specialists for 6 months): $750,000 - $1.25 million

Data acquisition and preparation: $100,000 - $500,000

Infrastructure and support costs: $200,000 - $800,000

Total Training Investment: $1.3M - $4.05M

Running Inference at Scale

Processing 100,000 daily requests

Average request: 500 tokens input, 750 tokens output

Using GPT-4 API pricing: ~$6,000 daily or $2.19 million annually

Using GPT-3.5-Turbo: ~$300 daily or $109,500 annually
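Here is a small sketch of the per-request arithmetic behind the GPT-4 figure. It assumes GPT-4's rates of $0.03 per 1,000 input tokens and $0.06 per 1,000 output tokens; the function name is made up for illustration. With those rates, 100,000 requests per day reproduces the roughly $6,000 daily cost.

```python
def daily_api_cost(requests_per_day, input_tokens, output_tokens,
                   input_usd_per_1k, output_usd_per_1k):
    """Daily API bill for a fixed request shape and per-1K-token prices."""
    per_request = (input_tokens / 1000 * input_usd_per_1k
                   + output_tokens / 1000 * output_usd_per_1k)
    return requests_per_day * per_request


# Assumed GPT-4 rates: $0.03 per 1K input tokens, $0.06 per 1K output tokens.
gpt4_daily = daily_api_cost(100_000, 500, 750, 0.03, 0.06)
print(f"Daily:  ${gpt4_daily:,.0f}")
print(f"Annual: ${gpt4_daily * 365:,.0f}")
```

Because cost scales linearly with request volume, changing the first argument is the quickest way to test your own traffic assumptions.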

The stark difference in inference costs between model generations illustrates why many companies opt for slightly less advanced models when capabilities are similar enough.

Most businesses don't need cutting-edge performance for every task. Understanding the cost implications helps companies make rational decisions about which capabilities truly justify premium pricing.

Cost-Saving Strategies That Actually Work

Smart companies are adopting several strategies to balance capabilities with economics:

Quantization: Reducing numerical precision from 32-bit to 8-bit or even 4-bit shrinks model memory by 75% or more and can cut inference costs substantially, often with minimal impact on output quality.

Caching: Storing responses for common queries eliminates redundant processing, particularly effective for informational AI assistants.

Right-sizing Models: Using smaller, specialized models for specific tasks rather than one massive model for everything.

Hybrid Approaches: Combining rule-based systems with AI for straightforward tasks while reserving complex reasoning for advanced models.
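To make the quantization strategy concrete, here is a minimal sketch of symmetric 8-bit quantization on a handful of weights. This is one simple scheme among many, the weight values are invented, and production libraries use more sophisticated per-channel or per-group variants.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]  # hypothetical 32-bit weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

print(quantized)
print([round(r, 4) for r in restored])
# Storage per weight drops from 4 bytes (32-bit) to 1 byte (8-bit).
print(f"Memory saved: {1 - 1 / 4:.0%}")
```

The restored values differ from the originals by at most half a quantization step, which is why quality often holds up even though each weight takes a quarter of the space.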

The most successful AI implementations use a layered approach, handling simple tasks with lightweight systems and only deploying resource-intensive models when truly necessary.
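A layered setup can be sketched in a few lines: cache repeated questions, send easy ones to a cheap model, and escalate only when needed. Everything below is hypothetical, including the `small_model` and `large_model` stand-ins and the crude `needs_large_model` heuristic. Real routers typically use a classifier or confidence score rather than keyword checks.

```python
from functools import lru_cache


def small_model(prompt):
    """Stand-in for a cheap, lightweight model call."""
    return f"[small] {prompt}"


def large_model(prompt):
    """Stand-in for an expensive, frontier model call."""
    return f"[large] {prompt}"


def needs_large_model(prompt):
    # Hypothetical heuristic: long prompts or explicit reasoning requests.
    return len(prompt.split()) > 50 or "step by step" in prompt.lower()


@lru_cache(maxsize=10_000)
def answer(prompt):
    """Cache identical prompts; route the rest to the cheapest suitable model."""
    if needs_large_model(prompt):
        return large_model(prompt)
    return small_model(prompt)


print(answer("What are your opening hours?"))
print(answer("What are your opening hours?"))  # served from the cache
print(answer("Explain step by step how to migrate our billing data"))
print(answer.cache_info())
```

The second identical question never reaches a model at all, and only the third request pays the premium rate.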

RESEARCH SPOTLIGHT: EFFICIENCY REVOLUTION

While much attention focuses on building bigger models, the real revolution is happening in efficiency. Researchers are finding ways to achieve more with less across both training and inference:

Training Efficiency Breakthroughs:

Parameter-Efficient Fine-Tuning: Techniques like LoRA (Low-Rank Adaptation) customize large models by training small adapter matrices, often around 0.1% of the parameter count, while the original weights stay frozen.

Data Efficiency: Advanced data curation methods achieve better results with 40% less training data.

Transfer Learning: Building on existing models rather than training from scratch reduces costs by 60-90% for specialized applications.
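The savings from LoRA-style fine-tuning come from simple arithmetic. Instead of updating a full weight matrix, it trains two thin matrices whose product has the same shape. The sketch below counts parameters for a single hypothetical 4,096 x 4,096 layer at rank 8; the layer size and rank are assumptions for illustration.

```python
def lora_trainable_fraction(d_out, d_in, rank):
    """Share of a d_out x d_in weight matrix that a rank-r adapter trains."""
    full_params = d_out * d_in
    # The adapter is two thin matrices: (d_out x rank) and (rank x d_in).
    adapter_params = rank * (d_out + d_in)
    return adapter_params / full_params


# Hypothetical layer: 4,096 x 4,096 weights with a rank-8 adapter.
fraction = lora_trainable_fraction(4096, 4096, rank=8)
print(f"Trainable share of this layer: {fraction:.2%}")
```

That is under half a percent for the adapted layer. Because adapters are usually attached to only some of a model's layers, the share of the whole model being trained is smaller still.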

Inference Optimization:

Knowledge Distillation: Training smaller "student" models to mimic larger "teacher" models.

Pruning: Removing unnecessary connections in neural networks with little or no loss in performance.

Optimized Transformers: Architectural innovations that maintain capabilities while requiring fewer computational resources.
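Pruning is the easiest of these to show in miniature. The sketch below uses magnitude pruning, one simple approach that zeroes out the weights closest to zero. The weight values and the 50% sparsity target are invented, and in practice accuracy is re-checked (and the model often briefly retrained) after pruning.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

Half the connections are gone, but the three largest weights, which carry most of the signal in this toy example, are untouched.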

These advancements are starting to flatten the cost curve that has dominated AI economics, suggesting that performance gains may not necessarily require proportional increases in resources.

THE ENVIRONMENTAL SIDE OF THE EQUATION

The economics of AI isn't just about dollars – it's also about environmental impact. Training a single large language model can emit as much carbon as five cars driven for their entire lifetimes.

This environmental cost is becoming increasingly important to investors, customers, and regulators. Forward-thinking organizations are addressing this challenge through:

Carbon-Aware Computing: Scheduling intensive training during periods of renewable energy abundance.

Efficiency Metrics: Measuring not just performance but performance-per-watt.

Environmental Impact Statements: Publishing carbon footprints alongside model cards.
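Carbon-aware scheduling can be as simple as picking the cleanest window in a forecast. The sketch below finds the start hour that minimizes total grid carbon intensity over a job's duration. The hourly forecast values (in gCO2 per kWh) are invented for illustration; a real scheduler would pull them from a grid data provider.

```python
def best_start_hour(carbon_intensity, job_hours):
    """Start index of the contiguous window with the lowest total intensity."""
    if job_hours > len(carbon_intensity):
        raise ValueError("job is longer than the forecast")
    starts = range(len(carbon_intensity) - job_hours + 1)
    return min(starts, key=lambda s: sum(carbon_intensity[s:s + job_hours]))


# Hypothetical hourly forecast in gCO2 per kWh.
forecast = [420, 390, 310, 180, 150, 170, 260, 400]
start = best_start_hour(forecast, job_hours=3)
print(f"Start the 3-hour job at hour {start}")
```

Shifting a flexible training job by a few hours costs nothing in compute, yet in this toy forecast it cuts the job's carbon intensity by more than half compared with starting immediately.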

Many of the efficiency improvements motivated by economic concerns also deliver environmental benefits. This is creating a virtuous cycle where financial and environmental incentives align.

As consumers and enterprises become more environmentally conscious, carbon efficiency is emerging as another competitive differentiator in the AI landscape.

TAKEAWAY: WHAT YOU SHOULD REMEMBER

Understanding the distinction between training and inference isn't just about technical knowledge – it's about making smarter decisions in the AI economy:

For Businesses: Match your AI strategy to your specific needs and resources. Sometimes a smaller, efficient model is better than the cutting edge.

For Investors: Look beyond headline capabilities to examine how companies are managing the economics of both training and inference.

For Consumers: Be aware that "free" AI services must recoup their costs somehow – usually through your data or future monetization.

As we move forward, the companies that will thrive aren't necessarily those with the largest models or the most compute – they're the ones that master the delicate balance between training innovation and inference efficiency.

The AI revolution isn't just about technological capabilities; it's about sustainable economics. And that starts with understanding the critical difference between training and inference.
