Introduction to A2A

On April 9, 2025, Google unveiled the Agent2Agent (A2A) protocol, an open standard designed to transform how AI agents communicate and collaborate across different platforms and frameworks. This groundbreaking protocol addresses one of the most persistent challenges in enterprise AI adoption: enabling agents built on different frameworks and by different vendors to work together seamlessly. With support from over 50 major technology partners and service providers, A2A promises to create truly interoperable multi-agent systems.

The Problem A2A Solves

Currently, organizations deploy specialized AI agents for different business operations, but these agents typically operate in isolation. As enterprises integrate these systems, enabling them to collaborate effectively in complex environments has become crucial for boosting productivity and unlocking new capabilities.

The fundamental challenge has been the lack of a common mechanism for agents to:

Discover each other

Communicate effectively

Coordinate actions across vendor ecosystems

Consider a typical enterprise scenario: one department might use an AI agent specialized in supply chain management, while another uses a different agent for customer service, and yet another for financial planning. Although these agents could potentially collaborate to solve complex cross-functional problems, the lack of standardized communication protocols has prevented them from working together efficiently.

A2A addresses this gap by providing a standardized approach to agent communication, enabling secure information exchange and collaborative actions between AI agents regardless of their underlying frameworks or vendors.

What is A2A and Why Does It Matter?

Google's Agent2Agent (A2A) protocol is an open communication standard designed to enable independent AI agents to interoperate seamlessly. Released on April 9, 2025, A2A addresses the fragmentation problem in the AI agent landscape by providing a standardized way for AI agents to communicate and collaborate, even if they were built by different developers, use different frameworks, or run on different platforms.

The Problem A2A Addresses

Organizations today are deploying a variety of specialized AI agents—for customer support, data analysis, HR functions, and more—often built by different vendors with proprietary APIs and mismatched data formats. This siloed approach severely limits the potential of "agentic AI," where multiple agents could collaborate to solve complex, multi-step problems.

Without a common protocol, your sales AI can't easily communicate with your finance AI, your customer service agent can't coordinate with your inventory management system, and so forth. This creates information silos that limit collective potential and forces organizations to either:

Invest in custom integration work (expensive and brittle)

Accept the constraints of siloed agent capabilities (inefficient)

Why A2A Matters

A2A unlocks a dynamic multi-agent ecosystem by standardizing how agents converse and cooperate. By providing a universal "language" for agents to interact, it allows an organization's AI agents to collaborate across previously siloed data and applications.

For example:

An HR recruiting agent could query an internal knowledge base agent for candidate information

A customer service agent could delegate billing questions to a specialized finance agent

A planning agent could collaborate with specialized agents for different aspects of a complex project

According to Google's announcement, this interoperability "will increase autonomy and multiply productivity gains, while lowering long-term costs," because organizations can mix and match the best agents for each job rather than being locked into a single vendor's ecosystem.

Industry Support and Open Standard

A2A is significant because it's both open-source and backed by industry consensus. Google introduced A2A in collaboration with over 50 partner companies, including major tech providers (Atlassian, Box, Cohere, Intuit, MongoDB, PayPal, Salesforce, SAP, ServiceNow, Workday), AI startups (like LangChain), and system integrators.

This broad support means A2A isn't just a Google-internal tool—it's being shaped as a universal protocol for agent communication, with a shared vision of cross-vendor interoperability. This industry momentum could make A2A a de facto standard for agent interactions, much like HTTP became standard for web services.

The timing reflects an inflection point: AI agents are becoming capable enough to handle autonomous tasks, but without a common protocol, their usefulness is limited to narrow contexts. A2A is designed to fill this gap, complementing other emerging standards like Anthropic's Model Context Protocol (MCP).

In summary, A2A is to AI agents what APIs are to web services—a lingua franca for interaction. It matters because it promises greater flexibility for builders, reduced integration headaches, and ultimately more intelligent, cohesive AI-driven products.

Key Features and Benefits of A2A

Google's A2A protocol includes thoughtful design features that make agent collaboration practical in real-world scenarios:

🌐 Standardized Communication

A2A defines a standard message-passing format built on familiar web standards: HTTP for transport, JSON-RPC 2.0 for structured request and response messages, and Server-Sent Events (SSE) for streaming updates. Because it reuses existing standards, A2A fits into modern tech stacks without reinventing the wheel, and developers can work with ordinary HTTP requests and JSON, which significantly lowers the barrier to adoption.
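
To make this concrete, here is a minimal Python sketch of the JSON-RPC 2.0 envelope a client agent might POST to a remote agent. The method name `tasks/send` and the `params` layout are taken from the initial draft of the A2A specification as we understand it and may differ in later versions; the helper name `build_send_task_request` is hypothetical.

```python
import itertools
import json
import uuid
from typing import Optional

# JSON-RPC request IDs only need to be unique per client; a counter works.
_request_ids = itertools.count(1)


def build_send_task_request(text: str, task_id: Optional[str] = None) -> dict:
    """Build a JSON-RPC 2.0 request asking a remote agent to work on a task.

    The method name "tasks/send" and the params layout follow the initial
    A2A draft spec (an assumption; later versions may rename them).
    """
    return {
        "jsonrpc": "2.0",            # required JSON-RPC version marker
        "id": next(_request_ids),    # lets the client match the response
        "method": "tasks/send",
        "params": {
            "id": task_id or str(uuid.uuid4()),  # client-chosen task ID
            "message": {
                "role": "user",
                "parts": [{"type": "text", "text": text}],
            },
        },
    }


request = build_send_task_request("Summarize Q3 revenue by region")
body = json.dumps(request)  # the body of an HTTP POST to the agent's A2A endpoint
```

Per JSON-RPC 2.0, the reply carries the same `id` and either a `result` or an `error` member, which is what lets a client pair responses with requests.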

🤝 Vendor & Framework Agnostic

The protocol is explicitly designed to work across different frameworks and vendors. An agent written with Google's Agent Development Kit (ADK) and one built on an open-source library like LangChain can both speak A2A and understand each other. This vendor-neutral approach prevents lock-in and lets you mix and match AI agents from various providers. For example, you could pair a document analysis agent from Vendor A with a chatbot agent from Vendor B; as long as both implement A2A, they can communicate without custom glue code.

🗣️ Clear Message Structure

Each A2A interaction is modeled as a conversation with structured messages. Messages have a defined schema including a role (e.g., "user" or "agent"), a unique ID, and one or more content "parts." A part can be text, binary file data, or structured JSON data. This rich message structure makes conversations easier to track and debug than ad-hoc JSON or plain text. In practice, this means an agent can send not just a text reply, but also attach a file or a JSON payload in a standardized way.
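
The message structure maps naturally onto a few small types. The sketch below models a message with text, file, and data parts in Python; the JSON field names (`type`, `mimeType`, `bytes`, `messageId`) are assumptions modeled on the early draft schema, so check them against the current specification before relying on them.

```python
import base64
import uuid
from dataclasses import dataclass, field
from typing import Any, Dict, List, Union


@dataclass
class TextPart:
    text: str

    def to_json(self) -> Dict[str, Any]:
        return {"type": "text", "text": self.text}


@dataclass
class FilePart:
    name: str
    mime_type: str
    data: bytes

    def to_json(self) -> Dict[str, Any]:
        # Binary content travels base64-encoded inside the JSON message.
        return {
            "type": "file",
            "file": {
                "name": self.name,
                "mimeType": self.mime_type,
                "bytes": base64.b64encode(self.data).decode("ascii"),
            },
        }


@dataclass
class DataPart:
    data: Dict[str, Any]

    def to_json(self) -> Dict[str, Any]:
        return {"type": "data", "data": self.data}


Part = Union[TextPart, FilePart, DataPart]


@dataclass
class Message:
    role: str  # "user" or "agent"
    parts: List[Part] = field(default_factory=list)
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def to_json(self) -> Dict[str, Any]:
        return {
            "role": self.role,
            "messageId": self.message_id,
            "parts": [p.to_json() for p in self.parts],
        }


msg = Message(
    role="agent",
    parts=[
        TextPart("Here is the report you asked for."),
        FilePart("report.csv", "text/csv", b"region,revenue\nEMEA,120\n"),
        DataPart({"total": 120, "currency": "USD"}),
    ],
)
payload = msg.to_json()
```

Keeping each part self-describing through its `type` field is what lets a receiver handle the text, file, and structured data in one message without guessing.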

🧩 Capability Discovery via Agent Cards

A2A introduces "Agent Cards," which are public JSON files (typically hosted at a well-known URL like /.well-known/agent.json) that describe an agent's capabilities, skills, and authentication requirements. This works like a metadata profile for the agent. Other agents can fetch an Agent Card to discover what the agent can do and how to talk to it—for example, what "skills" or functions it offers, and where its A2A endpoint is. This enables a kind of service discovery for AI agents, so agents can find the right collaborator for a given task.
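
A client's first step is usually to locate and sanity-check a card. This sketch assumes a hypothetical agent at `agents.example.com` and a card whose fields follow the early draft (`name`, `url`, `version`, `capabilities`, `skills`); the required-field check is our own simplification, not a rule from the spec.

```python
import json
from urllib.parse import urljoin

WELL_KNOWN_PATH = "/.well-known/agent.json"

# A hypothetical Agent Card; field names follow the initial draft spec,
# but check the current schema before relying on them.
EXAMPLE_CARD = """
{
  "name": "Currency Agent",
  "description": "Converts amounts between currencies.",
  "url": "https://agents.example.com/currency",
  "version": "1.0.0",
  "capabilities": {"streaming": true, "pushNotifications": false},
  "authentication": {"schemes": ["Bearer"]},
  "defaultInputModes": ["text"],
  "defaultOutputModes": ["text"],
  "skills": [
    {"id": "convert", "name": "Convert currency",
     "description": "Convert an amount from one currency to another.",
     "tags": ["finance", "currency"]}
  ]
}
"""

REQUIRED_FIELDS = ("name", "url", "version", "capabilities", "skills")


def agent_card_url(base_url: str) -> str:
    """Return the well-known Agent Card location for an agent's host."""
    # A path starting with "/" makes urljoin replace any path on base_url.
    return urljoin(base_url, WELL_KNOWN_PATH)


def parse_agent_card(raw: str) -> dict:
    """Parse an Agent Card and check the fields this client relies on."""
    card = json.loads(raw)
    missing = [f for f in REQUIRED_FIELDS if f not in card]
    if missing:
        raise ValueError(f"Agent Card missing fields: {missing}")
    return card


card = parse_agent_card(EXAMPLE_CARD)
print(agent_card_url("https://agents.example.com/currency"))
# → https://agents.example.com/.well-known/agent.json
```

In a live client, the raw text would come from an HTTP GET on the computed URL, for example with `urllib.request.urlopen`, and the card's `url` field then tells the client where to send A2A requests.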

⚙️ Standardized Function Calls (Skills)

Building on capability discovery, A2A lets agents expose their functions or skills in a uniform way so that other agents can use them through the protocol. For example, an agent's Agent Card might list skills such as "Translate text" or "Schedule a meeting." Another agent requests one of these skills by sending a task through the standard A2A methods, with no custom API code written for each skill. This standardized approach means specialized agents can act like modular components: any other agent can use their capabilities when needed.
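
Once cards are fetched, choosing a collaborator can be as simple as matching on skill metadata. The cards, URLs, and the `find_skill` helper below are hypothetical, and tag matching is a deliberately naive stand-in; a production client might let an LLM read the skill descriptions instead.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical Agent Cards, trimmed to the fields this sketch uses.
CARDS = [
    {"name": "Calendar Agent", "url": "https://cal.example.com/a2a",
     "skills": [{"id": "schedule", "name": "Schedule a meeting",
                 "tags": ["calendar", "meetings"]}]},
    {"name": "Translator", "url": "https://tr.example.com/a2a",
     "skills": [{"id": "translate", "name": "Translate text",
                 "tags": ["language", "translation"]}]},
]


def find_skill(cards: Iterable[dict], wanted_tag: str) -> Optional[Tuple[str, str]]:
    """Return (agent endpoint URL, skill id) for the first skill tagged wanted_tag."""
    for card in cards:
        for skill in card.get("skills", []):
            if wanted_tag in skill.get("tags", []):
                return card["url"], skill["id"]
    return None  # no advertised skill matches


match = find_skill(CARDS, "translation")
print(match)  # → ('https://tr.example.com/a2a', 'translate')
```

The returned URL is where the client would then send its task; the skill itself is described in the request message rather than called as a separate endpoint.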

🔄 Conversation Threading & Context

A2A is designed for multi-turn interactions and long-running conversations between agents. It supports conversation threading through unique message IDs and optional parent IDs that tie messages together in a dialogue thread. There's also a notion of a Task—a unit of work tracked by a unique Task ID and a state machine (e.g., submitted, working, input-required, completed). Together, these allow agents to maintain context over multiple exchanges, ask clarifying questions, or pause for input and then resume.
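
The task lifecycle can be modeled as a small state machine. The state names come from the A2A task states (the first four are named above, and the draft spec also defines `failed` and `canceled`), but the transition table is an assumption made for illustration; the specification is the authority on which moves are legal.

```python
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


TERMINAL = {TaskState.COMPLETED, TaskState.FAILED, TaskState.CANCELED}

# Assumed transition table for illustration only.
TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.CANCELED, TaskState.FAILED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED,
                        TaskState.FAILED, TaskState.CANCELED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.CANCELED, TaskState.FAILED},
}


class Task:
    def __init__(self, task_id: str) -> None:
        self.id = task_id
        self.state = TaskState.SUBMITTED
        self.history = [TaskState.SUBMITTED]

    def transition(self, new_state: TaskState) -> None:
        # Finished tasks never change state again.
        if self.state in TERMINAL:
            raise ValueError(f"task {self.id} already finished ({self.state.value})")
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal move {self.state.value} -> {new_state.value}")
        self.state = new_state
        self.history.append(new_state)


task = Task("task-42")
task.transition(TaskState.WORKING)
task.transition(TaskState.INPUT_REQUIRED)  # agent asks a clarifying question
task.transition(TaskState.WORKING)         # client replied; work resumes
task.transition(TaskState.COMPLETED)
print([s.value for s in task.history])
# → ['submitted', 'working', 'input-required', 'working', 'completed']
```

The `input-required` round trip is what allows an agent to pause, ask a question, and resume the same task instead of starting a new one.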

⏱️ Support for Long-Running Tasks

Not all agent tasks finish in seconds—some might involve extensive computation or human approval steps, taking minutes or hours. A2A is built with this in mind and supports asynchronous operation and real-time progress updates for long-running tasks. Specifically, an agent can offer a streaming mode using Server-Sent Events: the client agent can subscribe to a task and receive live updates (like partial results or status changes) as the remote agent works. There's also a mechanism for push notifications—the remote agent can send callbacks to a client-provided URL when significant events occur.
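
On the client side, streaming boils down to reading an SSE stream and decoding each event's `data:` payload as JSON. The parser below handles only the basic SSE framing and uses a canned stream; the event shape (a JSON-RPC response whose `result` carries a task `status` and a `final` flag) is an assumption based on the early draft spec.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield JSON payloads from a Server-Sent Events stream.

    "data:" lines accumulate and a blank line ends an event. Other fields
    (event:, id:, retry:) and comment lines starting with ":" are ignored,
    and an unterminated final event is dropped, as the SSE format specifies.
    """
    data_lines = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data_lines:
                yield json.loads("\n".join(data_lines))
                data_lines = []
        elif line.startswith("data:"):
            value = line[len("data:"):]
            if value.startswith(" "):
                value = value[1:]  # SSE strips a single leading space
            data_lines.append(value)


# A canned stream standing in for an HTTP response body.
stream = [
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"id": "task-42", "status": {"state": "working"}, "final": false}}\n',
    "\n",
    ": keep-alive comment\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"id": "task-42", "status": {"state": "completed"}, "final": true}}\n',
    "\n",
]
events = list(parse_sse(stream))
for event in events:
    result = event["result"]
    print(result["status"]["state"], result["final"])
# → working False
# → completed True
```

A client would typically stop reading once it sees an event marked final, then close the connection.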

🛡️ Secure by Design

In enterprise settings, security and permissions are paramount. A2A is secured by default, supporting enterprise-grade authentication and authorization mechanisms. It aligns with OpenAPI auth schemes out of the box, so you can apply familiar methods like API keys, OAuth tokens, service accounts, etc., to control which agents can communicate and what they can access. All communication happens over HTTPS, and agents can be sandboxed. This allows businesses to enable sensitive systems to interface via A2A with confidence that security policies are enforced.
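
As one illustration, a client could read the authentication schemes listed in an Agent Card and attach a matching credential. The `authentication.schemes` field and the `auth_headers` helper are assumptions for this sketch, and only bearer tokens are handled; obtaining the token (for example through an OAuth flow) is out of scope.

```python
from typing import Dict, Mapping


def auth_headers(card: dict, credentials: Mapping[str, str]) -> Dict[str, str]:
    """Build request headers for the first advertised scheme we hold a credential for.

    Only "Bearer" is handled here; other schemes (API keys, OAuth2 flows,
    mutual TLS) would need their own branches.
    """
    schemes = card.get("authentication", {}).get("schemes", [])
    if not schemes:
        return {}  # the card advertises no auth requirement
    for scheme in schemes:
        if scheme.lower() == "bearer" and "Bearer" in credentials:
            return {"Authorization": f"Bearer {credentials['Bearer']}"}
    raise PermissionError(f"no usable credential for schemes {schemes}")


card = {"name": "Currency Agent", "authentication": {"schemes": ["Bearer"]}}
headers = auth_headers(card, {"Bearer": "token-from-your-oauth-flow"})
print(headers)  # → {'Authorization': 'Bearer token-from-your-oauth-flow'}
```

Failing loudly when no scheme matches is a deliberate choice: silently sending an unauthenticated request would only surface later as a less informative error from the remote agent.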

🎨 Modality-Agnostic (Beyond Text)

While many AI agents today communicate via text, A2A is built to handle multiple content modalities. Thanks to the "parts" system in messages, agents can exchange not just text but also images, audio/video streams, or structured data forms. For example, an agent could send an image or even start a live audio stream as part of a message. The protocol also lets agents negotiate formats and UI capabilities, for example whether the receiving agent can display rich media or needs a text fallback. This future-proofs the protocol for emerging use cases like agents with vision or speech capabilities.

✅ Consistent Error and State Handling

A2A defines a standard way to handle errors and statuses. Instead of each agent inventing its own error codes and messages, A2A reports failures as JSON-RPC 2.0 error objects (a numeric code plus a message), extending the standard JSON-RPC codes with a few A2A-specific ones, and tracks progress through well-defined task states. If something goes wrong or an agent cannot fulfill a request, it responds in a predictable A2A format. This greatly simplifies debugging multi-agent interactions.
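
Since A2A messages use JSON-RPC 2.0, a client can unwrap every response the same way. The codes in the table below are the standard JSON-RPC 2.0 ones; A2A's additional codes are not reproduced here, and the `A2AError` class and `unwrap` helper are hypothetical.

```python
from typing import Any, Dict

# Standard JSON-RPC 2.0 error codes; A2A adds its own codes on top of these.
JSONRPC_ERRORS = {
    -32700: "Parse error",
    -32600: "Invalid Request",
    -32601: "Method not found",
    -32602: "Invalid params",
    -32603: "Internal error",
}


class A2AError(Exception):
    def __init__(self, code: int, message: str, data: Any = None) -> None:
        super().__init__(f"{code} {message}")
        self.code = code
        self.message = message
        self.data = data


def unwrap(response: Dict[str, Any]) -> Any:
    """Return the result of a JSON-RPC response, or raise A2AError on error."""
    if "error" in response:
        err = response["error"]
        code = err.get("code", 0)
        # Fall back to the standard description if the server sent no message.
        message = err.get("message") or JSONRPC_ERRORS.get(code, "Unknown error")
        raise A2AError(code, message, err.get("data"))
    return response["result"]


ok = {"jsonrpc": "2.0", "id": 1, "result": {"id": "task-42", "status": {"state": "completed"}}}
bad = {"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}}
print(unwrap(ok)["status"]["state"])  # → completed
try:
    unwrap(bad)
except A2AError as e:
    print("remote agent rejected request:", e.code, e.message)
# → remote agent rejected request: -32601 Method not found
```

Converting error objects into one exception type keeps retry and logging logic in a single place instead of scattered across every call site.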

📡 Open Source & Community-Driven

A key feature of A2A is that it's completely open source (under Apache 2.0 license) and welcomes community contribution. Google has published the A2A spec as a draft on GitHub along with reference implementations and samples in multiple languages. This means anyone can implement or suggest improvements to the protocol, and the design will evolve with feedback from real-world developers.

In summary, A2A's feature set turns agents into plug-and-play components of a multi-agent system. Agents advertise what they can do, connect through structured dialogue, exchange rich information, and handle complex tasks together, with security, standardization, and community support built in. The benefits include faster integration, improved reliability, and the ability to leverage best-of-breed AI capabilities from anywhere.

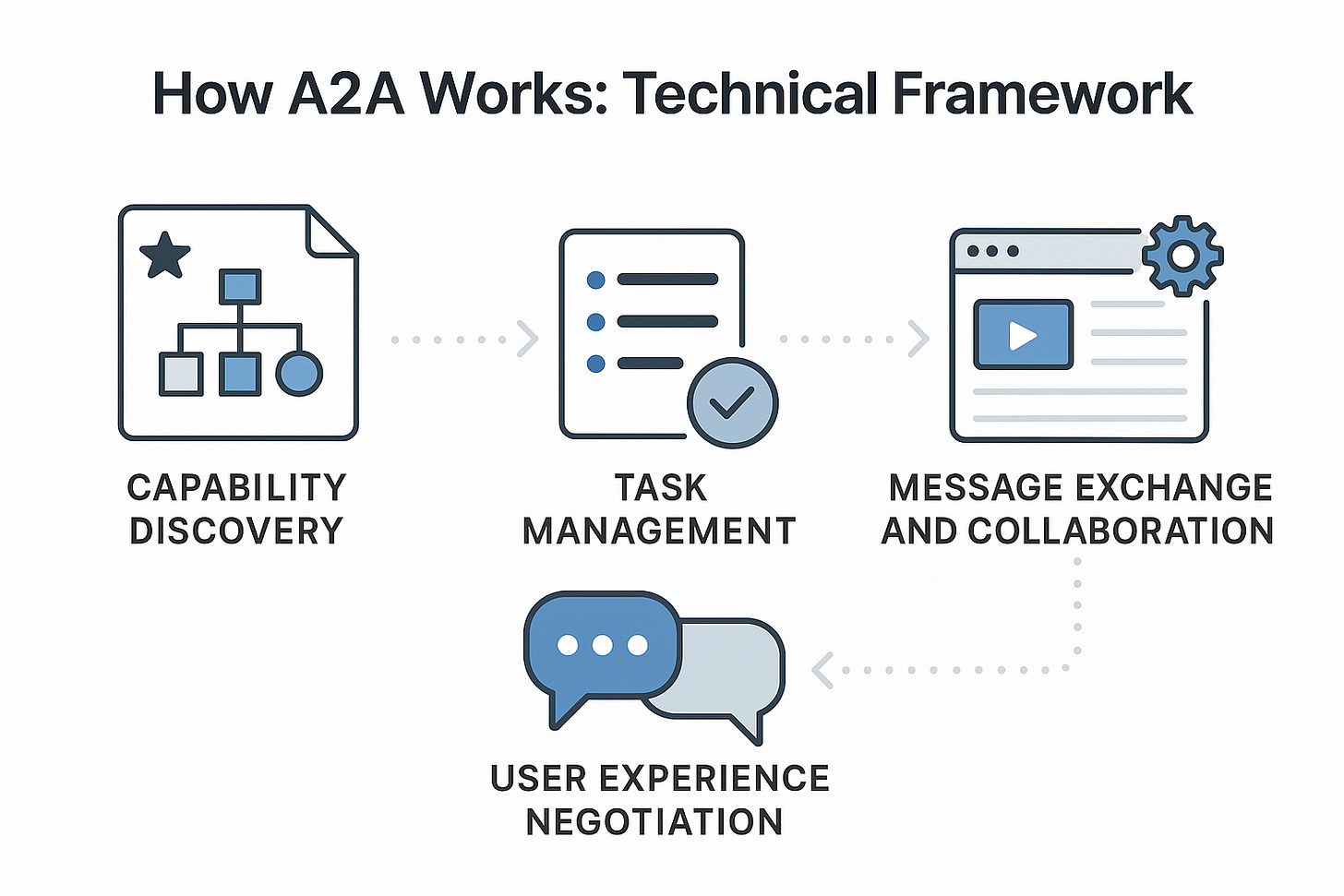

How A2A Works: Technical Framework

At its core, the A2A protocol establishes a standardized way for what Google calls "opaque agents" to work together while respecting organizational boundaries. Unlike systems that require agents to share internal resources, A2A enables collaboration without requiring agents to expose their internal reasoning or memory.

The protocol facilitates communication between a "client agent" and a "remote agent":

The client agent formulates tasks on behalf of the end user and communicates them to the remote agent

The remote agent executes these tasks

This interaction involves several key capabilities:

1. Capability Discovery

Agents advertise their capabilities through "Agent Cards" formatted in JSON, typically located at a standardized endpoint (/.well-known/agent.json). These cards contain metadata about the agent's skills, endpoint URL, and authentication requirements, allowing client agents to identify the most suitable remote agent for specific tasks.

2. Task Management

Communication between agents is task-oriented, with the protocol defining "task" objects and their lifecycle. A task can be completed immediately or, for long-running work, the agents can keep communicating to stay in sync on the task's latest status. The protocol supports various task states, including "submitted," "working," "input-required," "completed," "failed," and "canceled."
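
For agents that do not stream, a client can stay in sync by polling. This sketch assumes a `tasks/get` method that returns the task with its current `status.state`, as in the early draft spec; `call` is a hypothetical hook for whatever HTTP transport you use, which keeps the loop testable with a fake.

```python
import time
from typing import Any, Callable, Dict

TERMINAL_STATES = {"completed", "failed", "canceled"}

# `call(method, params)` sends a JSON-RPC request and returns its "result";
# it is a hypothetical hook, not part of any SDK.
Call = Callable[[str, Dict[str, Any]], Dict[str, Any]]


def wait_for_task(call: Call, task_id: str, interval: float = 1.0,
                  timeout: float = 60.0) -> Dict[str, Any]:
    """Poll a remote agent with tasks/get until the task stops running."""
    deadline = time.monotonic() + timeout
    while True:
        task = call("tasks/get", {"id": task_id})
        state = task["status"]["state"]
        if state in TERMINAL_STATES or state == "input-required":
            return task  # the caller decides how to answer input-required
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} still {state} after {timeout}s")
        time.sleep(interval)


def fake_call_factory() -> Call:
    """A fake transport that reports progress, then completion."""
    states = iter(["submitted", "working", "working", "completed"])

    def call(method: str, params: Dict[str, Any]) -> Dict[str, Any]:
        assert method == "tasks/get"
        return {"id": params["id"], "status": {"state": next(states)}}

    return call


result = wait_for_task(fake_call_factory(), "task-42", interval=0.01)
print(result["status"]["state"])  # → completed
```

Returning on `input-required` rather than treating it as an error reflects the multi-turn design: the client is expected to send more input and then resume waiting.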

3. Message Exchange and Collaboration

Agents can exchange messages regarding context, replies, artifacts (the output of tasks), and user instructions. Each message contains "parts," which are complete data blocks of a specific content type (e.g., text, generated images, forms).

4. User Experience Negotiation

Client and remote agents can negotiate the correct format required for user interface capabilities. This includes support for different modalities such as text, iframes, videos, and web forms.
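
A simple form of this negotiation is intersecting the formats the client can render with those the agent can produce. The sketch assumes both sides express formats as MIME types (as we read the early draft spec, the client lists acceptable output modes in its request and the card lists the agent's defaults); the `negotiate_modes` helper is hypothetical.

```python
from typing import List, Sequence


def negotiate_modes(accepted: Sequence[str], offered: Sequence[str]) -> List[str]:
    """Return the output modes both sides support, in the client's order of preference."""
    offered_set = set(offered)
    common = [mode for mode in accepted if mode in offered_set]
    if not common:
        raise ValueError(f"no common output mode: client {list(accepted)}, agent {list(offered)}")
    return common


client_accepts = ["video/mp4", "image/png", "text/plain"]  # a rich-media UI
agent_offers = ["text/plain", "image/png"]                 # e.g. from the agent's card
print(negotiate_modes(client_accepts, agent_offers))       # → ['image/png', 'text/plain']
```

Preserving the client's ordering means the richest format both sides share wins, while `text/plain` remains available as the fallback.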

The protocol is built on popular existing standards such as HTTP, Server-Sent Events (SSE), and JSON-RPC, which significantly reduces implementation barriers for enterprises.

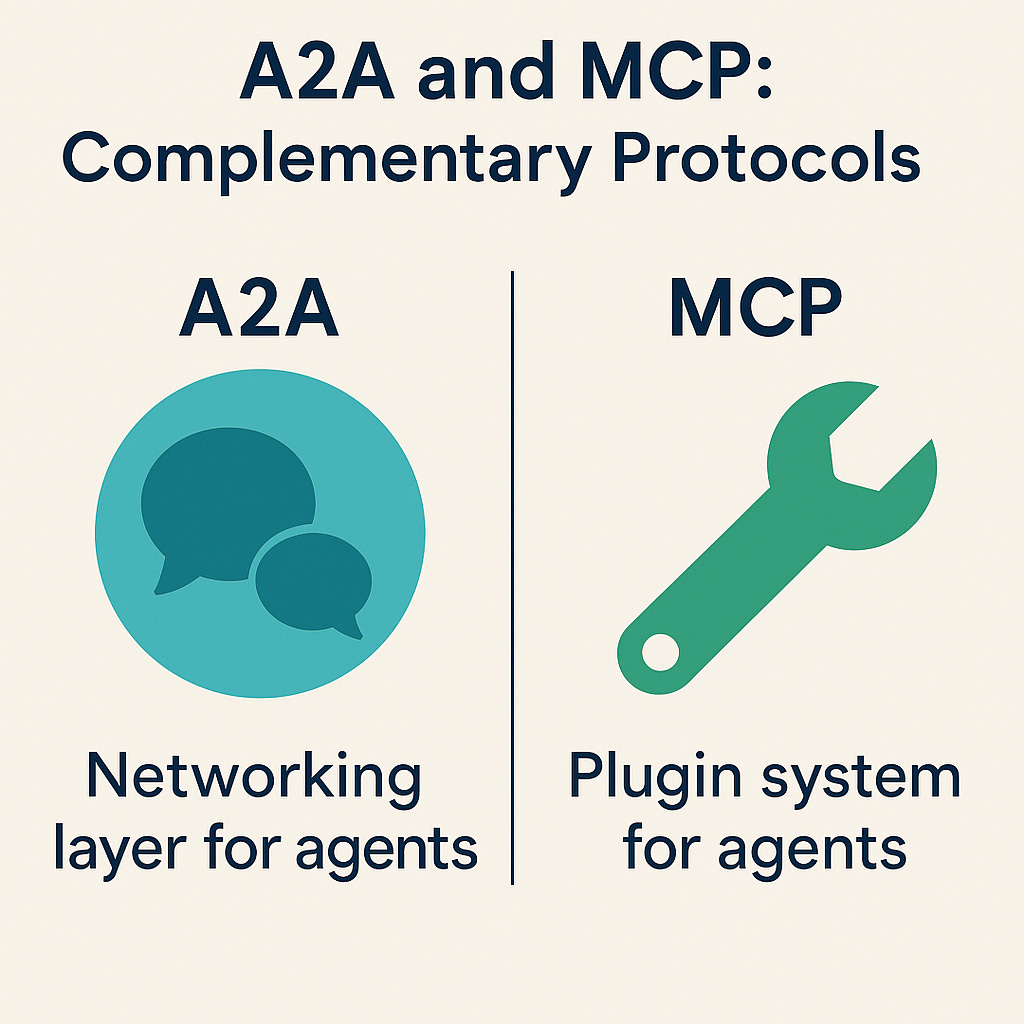

A2A and MCP: Complementary Protocols

To understand A2A's role in the broader AI ecosystem, it's important to recognize its relationship with Anthropic's Model Context Protocol (MCP), which has gained significant traction since its launch in November 2024.

Rather than competing, these protocols serve complementary functions:

Model Context Protocol (MCP) functions essentially as a plugin system for agents, making individual AI agents smarter by giving them tools, context, and structured access to data.

A2A serves as a networking layer for agents, helping them get smarter through collaboration by providing a shared language and secure channel for inter-agent communication.

Google provides an analogy: if MCP is the socket wrench, A2A is the conversation between mechanics as they diagnose a problem.

Google explicitly positions A2A as a complement to MCP, designed to address challenges identified in deploying large-scale, multi-agent systems.

Partner Ecosystem and Industry Support

One of the most significant aspects of the A2A protocol launch is the extensive partner ecosystem supporting the initiative. Google has garnered support from over 50 technology partners and leading service providers, signaling broad industry interest in agent interoperability.

The technology partners include major players such as Atlassian, Box, Cohere, Intuit, LangChain, MongoDB, PayPal, Salesforce, SAP, ServiceNow, UKG, and Workday.

Additionally, leading consulting and system integration firms have joined the initiative, including Accenture, BCG, Capgemini, Cognizant, Deloitte, HCLTech, Infosys, KPMG, McKinsey, PwC, TCS, and Wipro.

Implementing A2A: Getting Started

For organizations and developers interested in implementing A2A, Google has provided comprehensive resources to facilitate adoption:

Getting Started with A2A

The A2A protocol is available as an open-source project on GitHub, with a draft specification and code samples; documentation is on the official A2A website. Google has said it plans a production-ready version for release later in 2025, but organizations can begin exploring and implementing A2A using the current draft specification.

Implementation Approaches

Implementing A2A involves several key components:

Agent Card Creation: Defining a JSON-formatted Agent Card that describes the agent's capabilities

A2A Server Implementation: Developing an HTTP endpoint that implements the A2A protocol methods

A2A Client Development: Creating applications or agents that can consume A2A services

Authentication and Security: Implementing enterprise-grade authentication mechanisms

Google has provided SDKs, example applications, and sample agents for popular frameworks like Google's ADK, LangGraph, CrewAI, and Genkit to accelerate development.
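
To see how the Agent Card and server components fit together, here is a toy A2A server built only on Python's standard library, not on Google's SDK. It serves an Agent Card at the well-known path and answers a `tasks/send` JSON-RPC call by echoing the text back as a completed task's artifact. The card contents, the method name (from the early draft spec), and the response shape are assumptions; authentication, streaming, and persistence are deliberately omitted.

```python
import json
import threading
import uuid
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Any

# Hypothetical card for a toy "echo" agent; field names follow the initial draft spec.
AGENT_CARD = {
    "name": "Echo Agent",
    "description": "Repeats the text it receives.",
    "url": "http://localhost:8000/",
    "version": "0.1.0",
    "capabilities": {"streaming": False, "pushNotifications": False},
    "skills": [{"id": "echo", "name": "Echo", "description": "Echo text back."}],
}


def error_response(req_id: Any, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


class A2AHandler(BaseHTTPRequestHandler):
    def _send_json(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self) -> None:
        # Capability discovery: publish the Agent Card at the well-known path.
        if self.path == "/.well-known/agent.json":
            self._send_json(200, AGENT_CARD)
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self) -> None:
        # Every A2A call arrives as a JSON-RPC 2.0 request in the POST body.
        length = int(self.headers.get("Content-Length", 0))
        try:
            req = json.loads(self.rfile.read(length))
        except json.JSONDecodeError:
            self._send_json(200, error_response(None, -32700, "Parse error"))
            return
        if not isinstance(req, dict):
            self._send_json(200, error_response(None, -32600, "Invalid Request"))
            return
        if req.get("method") != "tasks/send":
            self._send_json(200, error_response(req.get("id"), -32601, "Method not found"))
            return
        try:
            params = req["params"]
            parts = params["message"]["parts"]
            text = " ".join(p["text"] for p in parts if p.get("type") == "text")
        except (KeyError, TypeError, AttributeError):
            self._send_json(200, error_response(req.get("id"), -32602, "Invalid params"))
            return
        # Toy "work": finish immediately and return the echo as an artifact.
        task = {
            "id": params.get("id") or str(uuid.uuid4()),
            "status": {"state": "completed"},
            "artifacts": [{"parts": [{"type": "text", "text": f"echo: {text}"}]}],
        }
        self._send_json(200, {"jsonrpc": "2.0", "id": req.get("id"), "result": task})

    def log_message(self, fmt: str, *args: Any) -> None:
        pass  # silence per-request logging for the demo


def serve(port: int = 8000) -> ThreadingHTTPServer:
    """Start the toy agent on a background thread (port 0 picks a free port)."""
    server = ThreadingHTTPServer(("127.0.0.1", port), A2AHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For a quick manual run, `ThreadingHTTPServer(("127.0.0.1", 8000), A2AHandler).serve_forever()` blocks and serves on port 8000; `serve(0)` is the non-blocking variant, which is handy in tests because port 0 asks the OS for a free port.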

Future Implications and Industry Impact

The introduction of A2A represents a significant milestone in the evolution of AI systems, with far-reaching implications:

Accelerating the Agent Ecosystem

If A2A gains widespread adoption – and the early support suggests it might – it could accelerate the agent ecosystem similarly to how Kubernetes standardized cloud-native applications or how OAuth streamlined secure access across platforms.

This standardization could drive innovation by allowing developers to focus on creating specialized, high-quality agents rather than solving interoperability challenges.

Enabling Complex Multi-Agent Systems

A2A enables the development of more sophisticated multi-agent systems that can tackle complex, multifaceted problems. By facilitating collaboration between specialized agents, each with its unique capabilities and access to specific data sources, A2A makes it possible to create systems that exceed the capabilities of any single agent.

Vendor-Agnostic AI Strategies

The open nature of A2A supports vendor-agnostic AI strategies, allowing organizations to select the best agents for their specific needs without being locked into a single provider's ecosystem.

Limitations and Challenges

While A2A offers significant benefits, it's important to be aware of potential challenges:

Early Stage & Maturity: As a newly released protocol, A2A is still evolving and best practices are emerging.

Adoption & Network Effects: The value of A2A increases with wider adoption across the industry.

Security and Trust Management: Organizations must carefully manage which agents can connect to their systems.

Learning Curve: Building systems of interoperating agents requires a different design mindset compared to single AI model integrations.

Resources for Building and Learning About A2A

Here is a list of resources, with links, to help you start building with and learning about Google's Agent2Agent (A2A) protocol:

Official Documentation and Specifications

📖 A2A Protocol GitHub Repository

Main Repository: https://github.com/google/A2A

This is the central hub for all documentation, JSON schemas, and sample code for the A2A protocol. It contains the draft specification and resources to help developers understand and implement the protocol.

💻 Official A2A Website

Documentation Hub: https://google.github.io/A2A/

This is the official documentation hub, which describes A2A as "An open protocol enabling communication and interoperability between opaque agentic applications."

🔍 A2A Announcement Post

Google Developers Blog: https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

This is the official announcement that provides an overview of the vision, design principles, and examples straight from Google's team.

📋 A2A JSON Schema Specification

Schema Definition: https://github.com/google/A2A/blob/main/specification/json/a2a.json

This file contains the detailed JSON schema that defines the A2A protocol structure, including agent authentication, capabilities, messages, and more.

Sample Code and Implementation Resources

🧩 Python A2A Samples

Official Python Samples: https://github.com/google/A2A/tree/main/samples/python

This directory contains sample agents written in multiple frameworks that perform example tasks with tools, including common code used to speak A2A over HTTP.

🐍 ADK Sample Agent

Expense Reimbursement Agent: https://github.com/google/A2A/blob/main/samples/python/agents/google_adk/README.md

This sample uses the Agent Development Kit (ADK) to create a simple "Expense Reimbursement" agent that demonstrates handling forms and multi-turn interactions.

🌐 Python A2A Library

Community Python Library: https://github.com/themanojdesai/python-a2a

This is a community library for implementing Google's A2A protocol in Python, providing high-level abstractions that make it easier to build A2A-compatible agents.

Integration with Existing Frameworks

🔌 Awesome A2A Collection

Repository of A2A Implementations: https://github.com/pab1it0/awesome-a2a

This is a curated list of A2A-compliant server implementations that helps developers build interoperable AI agent systems, with categorized examples for different use cases.

☁️ Google Cloud Integration

Python Docs Samples: https://github.com/GoogleCloudPlatform/python-docs-samples

This repository contains code samples used on Google Cloud, which may include examples relevant to A2A integration with Google Cloud services.

Community Resources

💬 Hacker News Discussion

A2A Protocol Thread: https://news.ycombinator.com/item?id=43631381

This discussion provides community perspectives on how A2A compares with Anthropic's Model Context Protocol (MCP) and its positioning in the AI ecosystem.

🤝 MCP Integration

MCP Python SDK: https://github.com/modelcontextprotocol/python-sdk

The official Python SDK for Model Context Protocol (MCP) servers and clients, which complements A2A for building comprehensive agent systems.

Additional AI Agent Resources

🤖 Awesome AI Agents

Curated List: https://github.com/e2b-dev/awesome-ai-agents

A comprehensive list of AI autonomous agents that may provide inspiration or complementary tools for your A2A implementation.

📊 AgentOps Monitoring

Python SDK: https://github.com/AgentOps-AI/agentops

A Python SDK for AI agent monitoring, cost tracking, and benchmarking that integrates with multiple agent frameworks, potentially useful for A2A systems.

🤗 Other Agent Frameworks

OpenAI Agents Python: https://github.com/openai/openai-agents-python

A lightweight framework for multi-agent workflows from OpenAI that provides an alternative perspective on agent architecture.

Getting Started Guide

To get started with A2A development:

First, explore the official A2A website to understand the core concepts and architecture.

Clone the A2A GitHub repository to access examples and specifications.

Run one of the sample agents from the Python samples directory to see A2A in action.

Consider using the community Python A2A library to simplify your implementation.

Join discussions on GitHub Issues to connect with the community and stay updated on protocol developments.

Conclusion

Google's Agent2Agent (A2A) protocol represents a significant advancement in the field of AI, addressing the critical challenge of agent interoperability. By providing a standardized way for AI agents to communicate and collaborate across different platforms and frameworks, A2A enables more powerful, flexible, and effective multi-agent systems.

With strong backing from major technology partners and an open-source approach that encourages community participation, A2A has the potential to transform the AI landscape, accelerating the development of sophisticated agent ecosystems and enabling new applications across various industries.

As the protocol moves toward a production-ready release later in 2025, organizations have the opportunity to begin exploring and implementing A2A, positioning themselves at the forefront of this exciting new era in agent interoperability and collaborative AI.
