17 Battle-Tested AI Prompts That Turn Raw Feedback Into Revenue-Driving Features

Table of Contents

Introduction

The High Cost of Untapped Feedback

Why AI-Powered Synthesis is the Competitive Edge

Part 1: Understanding Your Feedback Sources & Synthesis Potential

Support Tickets & Customer Service Interactions

User Interviews & Usability Tests

Surveys & Structured Feedback

App Store Reviews & Online Forums

Analytics & Behavioral Data

AI Model Selection Guide

Part 2: The Master Synthesis Workflow

Stage 1: Data Collection & Preparation

Stage 2: Initial AI Analysis

Source-Specific Summarization Prompts

Cross-Source Pattern Recognition

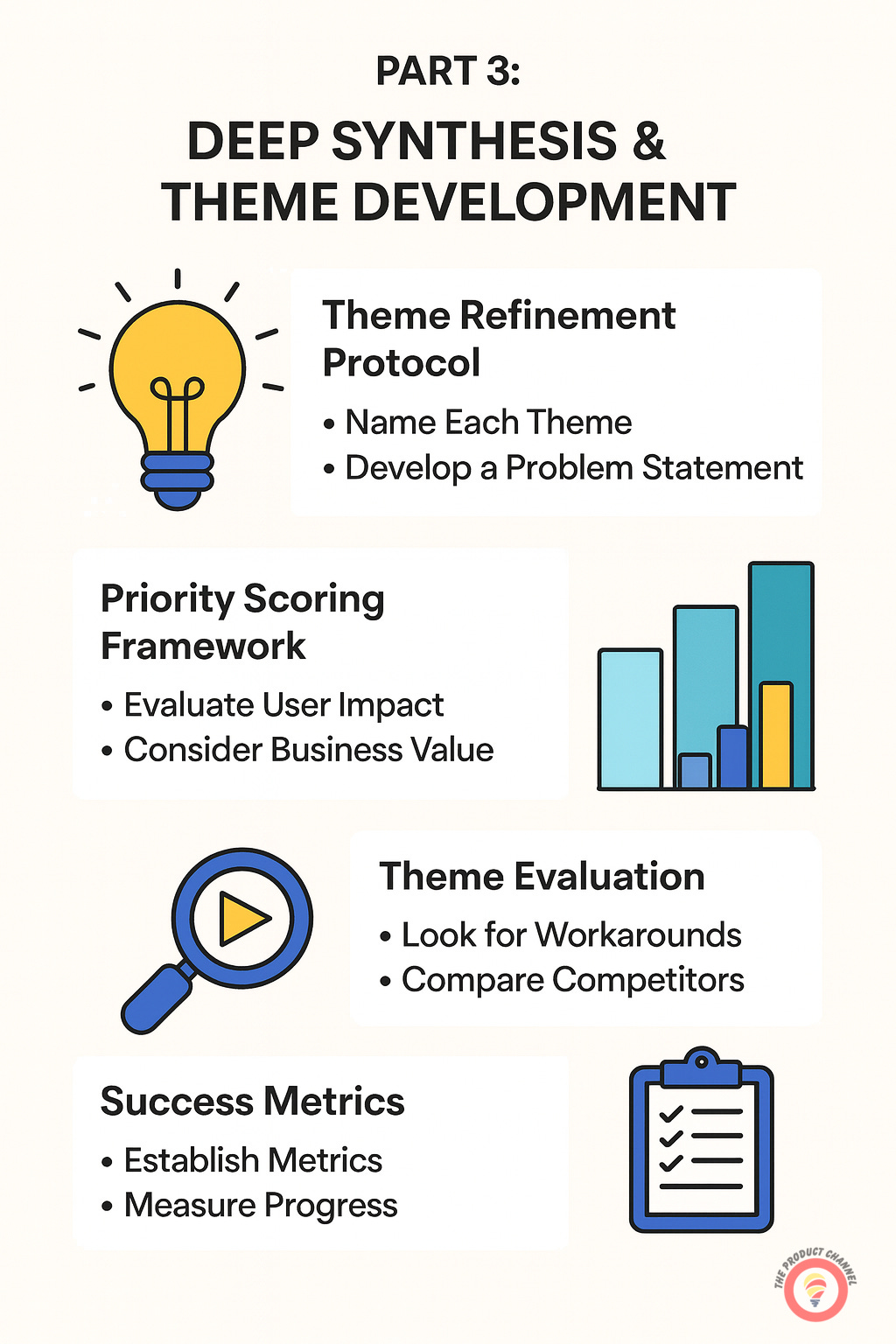

🔒 Part 3: Deep Synthesis & Theme Development

Theme Refinement Protocol

Priority Scoring Framework

🔒 Part 4: Validation & Verification

AI Hallucination Check

Stakeholder Validation Framework

🔒 Part 5: Actionable Output Creation

Executive Summary Generation

Development-Ready Documentation

🔒 Part 6: Advanced Synthesis Techniques

Predictive Theme Analysis

Competitive Intelligence Synthesis

Multi-Modal Analysis

Sentiment Evolution Tracking

🔒 Part 7: Visualization & Communication Templates

Executive Dashboard Template

Stakeholder Communication Templates

Visual Synthesis Formats (Journey Maps, Priority Matrix, Theme Timeline)

🔒 Part 8: Common Pitfalls & How to Avoid Them

Hallucination Trap

Sampling Bias Blindness

Over-Automation Syndrome

Analysis Paralysis

Tool Sprawl

Over-Categorization Trap

Echo Chamber Effect

🔒 Part 9: Measuring Success & ROI

Key Performance Indicators (Efficiency, Quality, Business Impact)

ROI Calculation Framework

Success Tracking Template

🔒 Part 10: Future-Proofing Your Synthesis Process

Building Organizational Capabilities (Individual → Team → Org)

Continuous Improvement Framework

🔒 Part 11: Advanced Techniques That 10x Your Results

Segment Sniper

Time Machine Method

Contradiction Hunter

Correlation Compass

The $100,000 Problem Hiding in Your Feedback Backlog

Every week, your product generates thousands of data points: support tickets pile up, survey responses flood in, app reviews accumulate, and user interview transcripts gather digital dust. McKinsey research shows the average product team spends 40% of its time on data analysis, yet 73% of customer insights never make it into product decisions.

Here's the costly reality: while you're manually sorting through feedback, competitors using AI-powered synthesis are shipping features 5x faster and achieving 40% higher product-market fit scores. Teams report saving $21,000 annually per person through AI adoption, with some reducing research synthesis time from weeks to hours.

This comprehensive playbook will show you exactly how to leverage AI tools like ChatGPT, Claude, Gemini, and specialized platforms to transform scattered feedback into crystal-clear product priorities. Whether you're a solo founder or leading a product team at scale, you'll learn the exact workflows, prompts, and strategies that top-performing teams use to maintain customer obsession at startup speed.

Here's a feedback dataset from a hypothetical company, "FlowTrackr," that you can use to test the prompts in this playbook before applying them to your actual data:

FlowTrackr_Complete_Feedback_Dataset.xlsx

Part 1: Understanding Your Feedback Sources & Synthesis Potential

The Modern Feedback Ecosystem

Product teams today collect feedback across an unprecedented number of channels. Understanding the unique characteristics and synthesis potential of each source is critical for effective AI implementation.

Support Tickets & Customer Service Interactions

Volume: 100-10,000+ monthly

AI Synthesis Potential: Excellent for pattern detection, automatic categorization, urgency scoring

Key Insight Types: Immediate pain points, workflow friction, feature confusion

Best AI Approach: Sentiment analysis + topic clustering + priority scoring

User Interviews & Usability Tests

Volume: 5-50 per quarter

AI Synthesis Potential: Superior for emotional context, motivation extraction, quote mining

Key Insight Types: Deep user psychology, job-to-be-done, unmet needs

Best AI Approach: Transcript analysis + thematic coding + journey mapping

Surveys & Structured Feedback

Volume: 100-10,000+ responses

AI Synthesis Potential: Ideal for statistical confidence, trend identification

Key Insight Types: Quantified preferences, satisfaction drivers, feature priorities

Best AI Approach: Open-text analysis + correlation detection + segmentation
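Survey scores become more useful once they are split by segment, which is the "segmentation" part of the approach above. Here is a minimal sketch. It assumes each response is a hypothetical (segment, score) pair on the standard 0-10 NPS scale, and it computes Net Promoter Score per segment:

```python
from collections import defaultdict


def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("no scores")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return round(100 * (promoters - detractors) / len(scores), 1)


def nps_by_segment(responses):
    """Group (segment, score) pairs and compute NPS for each segment."""
    groups = defaultdict(list)
    for segment, score in responses:
        groups[segment].append(score)
    return {seg: nps(scores) for seg, scores in sorted(groups.items())}


# Hypothetical responses for illustration, not real survey data.
responses = [("enterprise", 9), ("enterprise", 6), ("enterprise", 10), ("smb", 7), ("smb", 3)]
print(nps_by_segment(responses))  # {'enterprise': 33.3, 'smb': -50.0}
```

You can compute these numbers locally and paste them into the prompt alongside the open-text comments. The model then explains the gap between segments instead of estimating it.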

App Store Reviews & Online Forums

Volume: Continuous stream

AI Synthesis Potential: Excellent for unfiltered sentiment, competitive intelligence

Key Insight Types: Public perception, viral issues, feature requests

Best AI Approach: Real-time monitoring + sentiment tracking + competitive analysis

Analytics & Behavioral Data

Volume: Millions of events

AI Synthesis Potential: Powerful when combined with qualitative feedback

Key Insight Types: Usage patterns, feature adoption, user flows

Best AI Approach: Correlation analysis + predictive modeling + cohort analysis
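Behavioral data pairs best with qualitative feedback once it is reduced to a few interpretable numbers, such as where users drop out of a flow. Here is a minimal sketch. It assumes you can export how many users reached each step, and the step names and counts below are hypothetical:

```python
def funnel_dropoff(steps):
    """Return (from_step, to_step, drop-off %) for each consecutive pair of steps.

    `steps` is an ordered list of (step_name, users_who_reached_it) tuples.
    """
    result = []
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        # Share of users who reached prev_name but never reached name.
        drop = 100.0 * (prev_count - count) / prev_count if prev_count else 0.0
        result.append((prev_name, name, round(drop, 1)))
    return result


# Hypothetical onboarding funnel counts, not real data.
funnel = [
    ("signup", 1000),
    ("create_project", 620),
    ("invite_teammate", 310),
    ("first_report", 280),
]

for start, end, pct in funnel_dropoff(funnel):
    print(f"{start} -> {end}: {pct}% drop-off")
# signup -> create_project: 38.0% drop-off
# create_project -> invite_teammate: 50.0% drop-off
# invite_teammate -> first_report: 9.7% drop-off
```

The largest drop in this made-up funnel is create_project to invite_teammate. That is the kind of bottleneck worth pairing with support tickets or interview quotes that mention the same step.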

AI Model Selection Guide for Feedback Synthesis

Before we explore prompts for specific situations, here is a guide to selecting the right AI model for each feedback synthesis use case:

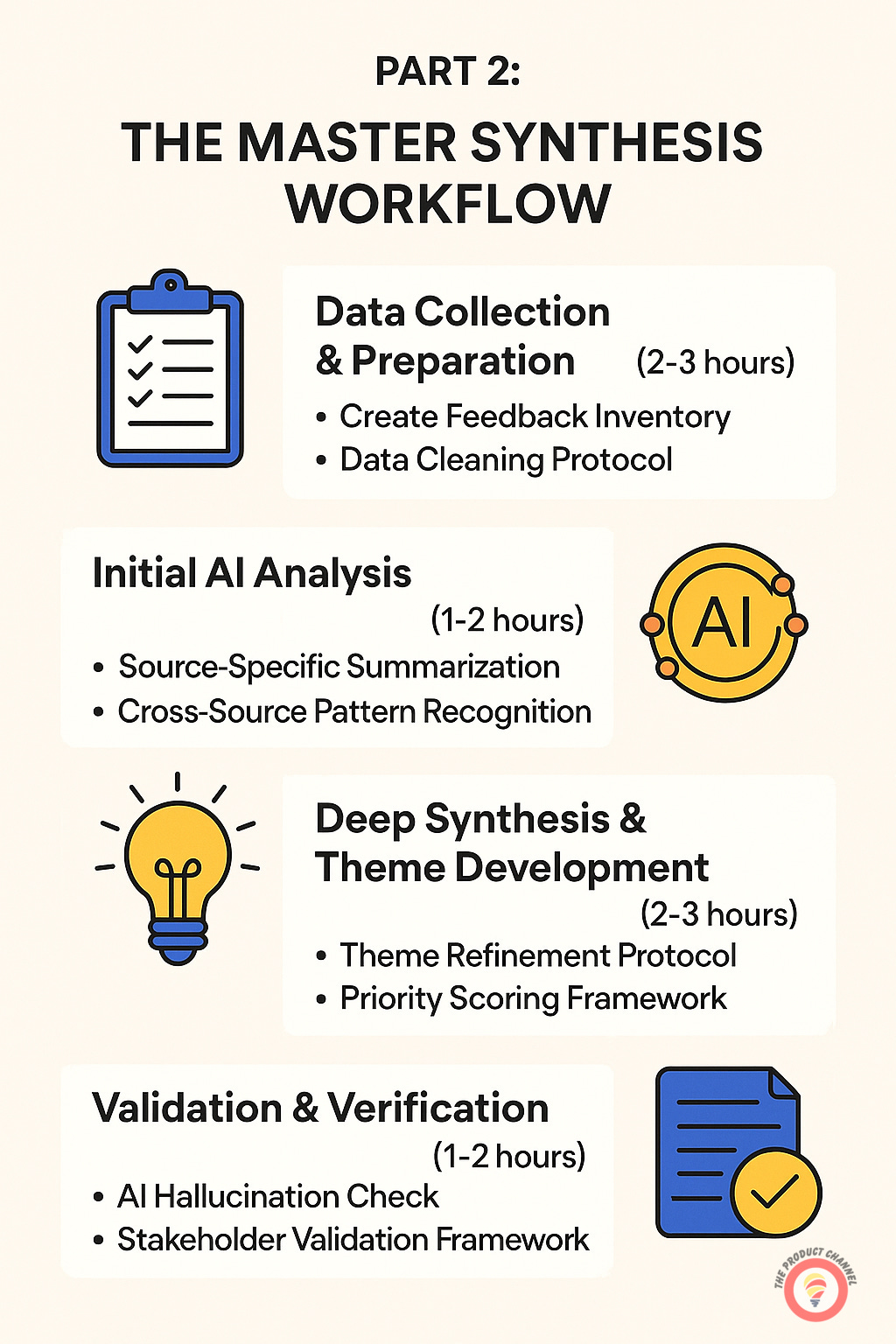

Part 2: The Master Synthesis Workflow

Stage 1: Data Collection & Preparation (2-3 hours)

1.1: Create Your Feedback Inventory

□ Export last 90 days of support tickets

□ Compile recent user interview transcripts

□ Download survey responses (CSV format)

□ Scrape app store reviews (last 200-500)

□ Export NPS comments and CSAT feedback

□ Gather sales team's "reasons for churn"

□ Pull customer success "feature requests"
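If your exports can't be filtered at the source, you can apply the 90-day window and the "most recent 200-500 reviews" cut afterwards. Here is a minimal sketch. It assumes each record is a dict with a hypothetical `date` field already in YYYY-MM-DD form (see the cleaning step in 1.2). The function names are illustrative.

```python
from datetime import date, timedelta
from typing import Optional


def within_last_days(records, days=90, today: Optional[date] = None):
    """Keep records whose 'date' (YYYY-MM-DD) is no older than `days` days."""
    if today is None:
        today = date.today()
    cutoff = today - timedelta(days=days)
    return [r for r in records if date.fromisoformat(r["date"]) >= cutoff]


def most_recent(records, n=500):
    """Return the n newest records, newest first (ISO dates sort correctly as text)."""
    return sorted(records, key=lambda r: r["date"], reverse=True)[:n]


# Hypothetical records for illustration.
tickets = [
    {"id": "T1", "date": "2024-01-15"},
    {"id": "T2", "date": "2023-09-01"},
]
print([t["id"] for t in within_last_days(tickets, today=date(2024, 3, 1))])  # ['T1']
```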

1.2: Data Cleaning Protocol

Remove all PII (names, emails, company names)

Standardize date formats (YYYY-MM-DD)

Convert all files to UTF-8 encoding

Create source attribution tags

Split mega-files into 50-100 item chunks
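The cleaning steps above can be scripted. The following is a minimal sketch, not a complete PII scrubber:

* It masks email addresses with a regular expression.
* It masks names only from a hypothetical `KNOWN_NAMES` list. Person and company names you haven't listed will slip through and still need a dedicated tool or a manual pass.
* The accepted input date formats and the source encoding in `to_utf8` are also assumptions.

```python
import re
from datetime import datetime

# Matches most ordinary email addresses (a heuristic, not full RFC 5322).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# Hypothetical names to mask; replace with your own customer/company list.
KNOWN_NAMES = ["Acme Corp", "Jane Doe"]

# Assumed date formats found in raw exports; extend for your sources.
INPUT_DATE_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d %b %Y"]


def redact(text):
    """Mask email addresses, then any name from KNOWN_NAMES (case-insensitive)."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    for name in KNOWN_NAMES:
        text = re.sub(re.escape(name), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


def to_iso_date(raw):
    """Convert a date string matching any INPUT_DATE_FORMATS entry to YYYY-MM-DD."""
    for fmt in INPUT_DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {raw!r}")


def clean(rows, source):
    """Redact text, standardize dates, and tag each row with its source."""
    return [
        {"source": source, "date": to_iso_date(r["date"]), "text": redact(r["text"])}
        for r in rows
    ]


def chunk(items, size=100):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def to_utf8(src_path, dst_path, src_encoding="cp1252"):
    """Re-save a text export as UTF-8 (the cp1252 source encoding is an assumption)."""
    with open(src_path, encoding=src_encoding) as f_in:
        content = f_in.read()
    with open(dst_path, "w", encoding="utf-8") as f_out:
        f_out.write(content)


raw = [{"date": "01/15/2024", "text": "Jane Doe (jane@acme.com) says login is too slow"}]
print(clean(raw, "support"))
# [{'source': 'support', 'date': '2024-01-15', 'text': '[REDACTED] ([EMAIL]) says login is too slow'}]
```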

1.3: Create Analysis Framework

Your data structure should look like:

| feedback_id | source | date | user_segment | text | sentiment | category |
|---|---|---|---|---|---|---|
| 001 | survey | 2024-01-15 | enterprise | "Login is too slow" | negative | performance |
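If you assemble the inventory with a script, a small record type keeps every source in the same columns. The sketch below assumes Python's standard `csv` and `dataclasses` modules. The `FeedbackRecord` and `to_csv` names are illustrative, not part of any tool.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class FeedbackRecord:
    feedback_id: str
    source: str
    date: str            # YYYY-MM-DD
    user_segment: str
    text: str
    sentiment: str = ""  # left blank until the AI (or a human) labels it
    category: str = ""


def to_csv(records):
    """Serialize records to CSV text with a header row matching the schema."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(FeedbackRecord)])
    writer.writeheader()
    for rec in records:
        writer.writerow(asdict(rec))
    return buf.getvalue()


rows = [FeedbackRecord("001", "survey", "2024-01-15", "enterprise",
                       "Login is too slow", "negative", "performance")]
print(to_csv(rows), end="")
```

When writing to disk instead of a string, open the file with `newline=""` (as the `csv` documentation advises). Also pass `encoding="utf-8"` to match the cleaning protocol.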

Stage 2: Initial AI Analysis (1-2 hours)

2.1: Source-Specific Summarization

This is where 90% of PMs fail. They use generic prompts and get generic insights.

Here's my battle-tested prompt structure that actually works:

# USER INTERVIEW ANALYSIS REPORT

## Interviewee: [Full Name]

## Executive Summary

[Brief overview of key findings - 3-4 sentences maximum]

## Top Pain Point Themes

1. [Theme Name] - [Total Mentions]

   * Description: [Theme description]

   * Pain Points: [List of pain points in this theme]

2. [Theme Name] - [Total Mentions]

...

3. [Theme Name] - [Total Mentions]

...

## Detailed Pain Point Breakdown

### Theme: [Theme Name] ([X] total mentions)

Description: [Brief theme description]

#### Pain Point: [Pain Point Name] - Mentioned [X] times

* Description: [Brief description of the specific pain point]

* Supporting Quotes:

  * "[Direct quote from transcript]" - [Interviewee Name]

  * "[Direct quote from transcript]" - [Interviewee Name]

#### Pain Point: [Pain Point Name] - Mentioned [X] times

...

### Theme: [Theme Name] ([X] total mentions)

...

Support Ticket Analysis Prompt:

You are a product analyst reviewing customer support tickets.

Context: [Product name and brief description]

Data: [Paste 50-100 support tickets]

Analyze and provide:

1. Top 5 issue categories with frequency counts

2. Severity breakdown (critical/high/medium/low)

3. Resolution time patterns

4. Common user confusion points

5. Feature gaps indicated by workaround requests

6. Direct quotes illustrating key pain points

7. Quick-fix opportunities vs. structural problems

Format as a structured report with actionable recommendations for Product and Engineering teams.

App Store/Online Review Analysis Prompt:

You are a product insights specialist analyzing public reviews.

Context: [Product name, category, and target audience]

Data: [Paste 50-100 reviews]

Analyze and provide:

1. Star rating distribution and trends over time

2. Primary positive themes with representative quotes

3. Primary negative themes with representative quotes

4. Competitor comparisons mentioned

5. Feature requests (explicit and implicit)

6. User segments providing feedback (if identifiable)

7. Critical bugs or technical issues reported

Format as a structured report that compares our standing against competitors and identifies immediate action items.
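Models can miscount when asked for a star rating distribution over 50-100 pasted reviews. It can help to compute item 1 yourself, then include the result in the prompt or use it to check the AI's answer. Here is a minimal sketch. It assumes each review is a dict with hypothetical `date` (YYYY-MM-DD) and `stars` (1-5) fields:

```python
from collections import Counter, defaultdict


def rating_distribution(reviews):
    """Count reviews per star rating, including ratings with zero reviews."""
    counts = Counter(r["stars"] for r in reviews)
    return {star: counts.get(star, 0) for star in range(1, 6)}


def monthly_average(reviews):
    """Average star rating per month, keyed by 'YYYY-MM' and sorted by month."""
    buckets = defaultdict(list)
    for r in reviews:
        buckets[r["date"][:7]].append(r["stars"])
    return {month: round(sum(s) / len(s), 2) for month, s in sorted(buckets.items())}


# Hypothetical reviews for illustration, not real data.
reviews = [
    {"date": "2024-01-03", "stars": 5},
    {"date": "2024-01-20", "stars": 2},
    {"date": "2024-02-11", "stars": 1},
    {"date": "2024-02-28", "stars": 2},
]
print(rating_distribution(reviews))  # {1: 1, 2: 2, 3: 0, 4: 0, 5: 1}
print(monthly_average(reviews))      # {'2024-01': 3.5, '2024-02': 1.5}
```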

Analytics Data Analysis Prompt:

You are a product analytics expert interpreting user behavior data.

Context: [Product name, key features, and typical user flow]

Data: [Paste key metrics or analytics export]

Analyze and provide:

1. User journey bottlenecks (high drop-off points)

2. Feature adoption rates and trends

3. User segments with distinct usage patterns

4. Correlation between feature usage and retention

5. Anomalies or unexpected behavior patterns

6. Growth opportunities based on usage trends

7. Recommended A/B tests to improve metrics

Format as a data-driven report that describes suggested visualizations and gives actionable insights for the product team.

2.2: Cross-Source Pattern Recognition

After individual analysis, combine insights:

I've analyzed feedback from multiple sources about [product]. Here are the summaries:

Survey Summary: [Paste]

Interview Summary: [Paste]

Support Ticket Summary: [Paste]

Review Summary: [Paste]

Identify:

1. Themes that appear across 2+ sources (with source breakdown)

2. Conflicts or contradictions between sources

3. User segment differences in feedback patterns

4. Temporal patterns (issues getting better/worse over time)

5. Root causes connecting surface-level complaints

6. Priority matrix: Impact (1-10) x Frequency (%) x Feasibility (1-10)

Create a unified problem statement for each major theme.

Part 3: Deep Synthesis & Theme Development (2-3 hours)


Subscribe to The Product Channel By Sid Saladi to keep reading this post and get 7 days of free access to the full post archives.